This describes a typical limited tender process using standard methods of price / quality measurement, with a pricing ratio set at 50%. It demonstrates that this scoring ratio will almost certainly result in the cheapest price winning the project, even with a very low quality score.

The sample scores used to test this model are as follows:

| Bidder Name | Fee (£) | Quality Score (Out of 100%) |
|---|---|---|
| Practice A | 102,450 | 82 |
| Practice B | 78,000 | 75 |
| Practice C | 125,150 | 85 |
| Practice D | 98,500 | 68 |
| Practice E | 25,000 | 25 |
| Practice F | 107,000 | 76 |

Note that the scores for Practice E have deliberately been set very low: just 25% for quality, with a fee of less than a third of the next cheapest bid. Unfortunately, such wild variations in price are not unusual when bidding for public sector work. There are few other sectors where any sensible person would accept a tender so far below the broad average of the others; yet, for architectural services, such low-ball bidding is common, and rarely rejected, despite the Public Contracts Regulations allowing commissioning bodies to reject “abnormally low” bids. Given that architectural salaries are broadly similar, the only explanation for low fees is that the bidding practice anticipates spending far less time working on the project than the others. There are no innovations in the market which enable practices to significantly reduce the cost of delivering their services without reducing the amount of time spent performing them, and therefore the quality of the design which derives from these efforts.

For the purpose of this exercise, the most expensive practice has also scored the highest for quality. This is useful to demonstrate how different scoring methods can achieve a reasonable balance between quality and price, delivering best value for the client.

The following sections explore different methods of scoring and, using the figures above, illustrate how different ratios and scoring methods result in very different outcomes.

Relative to Cheapest Method of Scoring

In our example, the lowest financial bid was £25,000, and the highest £125,150. Scoring was based on a quality / cost ratio of 50:50.

The highest quality score was 85% which, when adjusted to the quality ratio of 50%, results in a quality component of 42.5%.

Using this method of scoring, Practice E (the cheapest) is the winning bidder. Clearly, any practice securing work with a fee of less than a third of the nearest bidder is either going to be unable to service the project properly or will be making a significant loss. Nobody in their right mind would accept such a low tender from, say, a builder, as clearly the quality of the work would be commensurately poor. Yet this happens all the time when it comes to commissioning architectural services.

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 50.00) | Quality Score (%) (max. 50.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice E (WINNER) | 25,000 | 50.00 | 12.50 | 62.50 |
| 2 | Practice B | 78,000 | 16.03 | 37.50 | 53.53 |
| 3 | Practice A | 102,450 | 12.20 | 41.00 | 53.20 |
| 4 | Practice C | 125,150 | 9.99 | 42.50 | 52.49 |
| 5 | Practice F | 107,000 | 11.68 | 38.00 | 49.68 |
| 6 | Practice D | 98,500 | 12.69 | 34.00 | 46.69 |
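The relative to cheapest calculation is simple enough to sketch in a few lines of Python. This is a minimal sketch, assuming the price score is the price weighting multiplied by the lowest fee divided by each bidder’s fee, and the quality score is the raw quality percentage multiplied by the quality weighting. The names `BIDS` and `relative_to_cheapest` are illustrative only and are not taken from the live model. With the sample bids it reproduces the table above.

```python
# Sample bids: name -> (fee in £, quality score out of 100)
BIDS = {
    "Practice A": (102_450, 82),
    "Practice B": (78_000, 75),
    "Practice C": (125_150, 85),
    "Practice D": (98_500, 68),
    "Practice E": (25_000, 25),
    "Practice F": (107_000, 76),
}


def relative_to_cheapest(bids, quality_weight=0.5):
    """Rank bids: the cheapest fee takes all the price points, other fees
    are scored pro rata; quality is the raw score scaled by its weighting."""
    price_points = 100 * (1 - quality_weight)
    quality_points = 100 * quality_weight
    cheapest = min(fee for fee, _ in bids.values())
    rows = []
    for name, (fee, quality) in bids.items():
        price_score = price_points * cheapest / fee
        quality_score = quality_points * quality / 100
        total = price_score + quality_score
        rows.append((name, round(price_score, 2), round(quality_score, 2), round(total, 2)))
    # Highest total score first
    return sorted(rows, key=lambda row: row[3], reverse=True)


for row in relative_to_cheapest(BIDS, quality_weight=0.5):
    print(row)
```

Calling `relative_to_cheapest(BIDS, quality_weight=0.7)` gives the 70% quality / 30% price variant, where the most expensive bid comes out on top instead.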

Out of interest, let’s test the same figures using an alternative ratio: 70% quality and 30% price. This gives us the following results:

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 30.00) | Quality Score (%) (max. 70.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice C (WINNER) | 125,150 | 5.99 | 59.50 | 65.49 |
| 2 | Practice A | 102,450 | 7.32 | 57.40 | 64.72 |
| 3 | Practice B | 78,000 | 9.62 | 52.50 | 62.12 |
| 4 | Practice F | 107,000 | 7.01 | 53.20 | 60.21 |
| 5 | Practice D | 98,500 | 7.61 | 47.60 | 55.21 |
| 6 | Practice E | 25,000 | 30.00 | 17.50 | 47.50 |

This result isn’t ideal either: now the most expensive bidder has won the day, with a quality score only marginally higher than the nearest competitor’s (85% against 82%), but a fee more than a fifth higher (£125,150 against £102,450).

Perhaps this suggests that the relative to cheapest method of scoring is never the best one to use?

Relative to Best Method of Scoring

An alternative way of assessing quality is to award all of the available quality points to the best submission. Having established a shortlist of what are, presumably, the most capable qualifying competitors on the market, it is nonsensical that the cheapest price tender receives the full 50% of the price score, but the best submission does not receive the full 50% of the available points for quality.

It may be that assessors have already given the best submission the full available score for quality but, if not, this method scales every quality score relative to the best one, in the same way that the cheapest bid receives the maximum marks for price. In other words, the best quality submission receives the whole 50% available, with all the remaining scores calculated proportionately to this. For example, Practice A’s quality score of 82% becomes 82 ÷ 85 × 50 = 48.24%.

It goes some way to preventing the cheapest bid “buying” a project with an inferior submission accompanied by an abnormally low financial submission—but does it ensure that the client is receiving the best value for money?

In this example, and using the same 50:50 ratio, Practice E still wins, having scored 50.00% for price and 14.71% for quality. So, pursuing this method doesn’t seem to make much difference.

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 50.00) | Quality Score (%) (max. 50.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice E (WINNER) | 25,000 | 50.00 | 14.71 | 64.71 |
| 2 | Practice A | 102,450 | 12.20 | 48.24 | 60.44 |
| 3 | Practice B | 78,000 | 16.03 | 44.12 | 60.14 |
| 4 | Practice C | 125,150 | 9.99 | 50.00 | 59.99 |
| 5 | Practice F | 107,000 | 11.68 | 44.71 | 56.39 |
| 6 | Practice D | 98,500 | 12.69 | 40.00 | 52.69 |
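The only change for relative to best is that quality is also scored pro rata, against the best submission rather than against 100%. Here is a sketch under the same assumptions as a simple price calculation (lowest fee divided by each fee). The names `BIDS` and `relative_to_best` are hypothetical. It reproduces the totals in the table above.

```python
# Sample bids: name -> (fee in £, quality score out of 100)
BIDS = {
    "Practice A": (102_450, 82),
    "Practice B": (78_000, 75),
    "Practice C": (125_150, 85),
    "Practice D": (98_500, 68),
    "Practice E": (25_000, 25),
    "Practice F": (107_000, 76),
}


def relative_to_best(bids, quality_weight=0.5):
    """Cheapest fee takes all the price points and the best quality
    submission takes all the quality points; other bids are pro rata."""
    price_points = 100 * (1 - quality_weight)
    quality_points = 100 * quality_weight
    cheapest = min(fee for fee, _ in bids.values())
    best_quality = max(quality for _, quality in bids.values())
    totals = {}
    for name, (fee, quality) in bids.items():
        price_score = price_points * cheapest / fee
        quality_score = quality_points * quality / best_quality
        totals[name] = round(price_score + quality_score, 2)
    # Highest total score first
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for name, total in relative_to_best(BIDS):
    print(f"{name}: {total:.2f}")
```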

Mean Narrow Average Method of Scoring

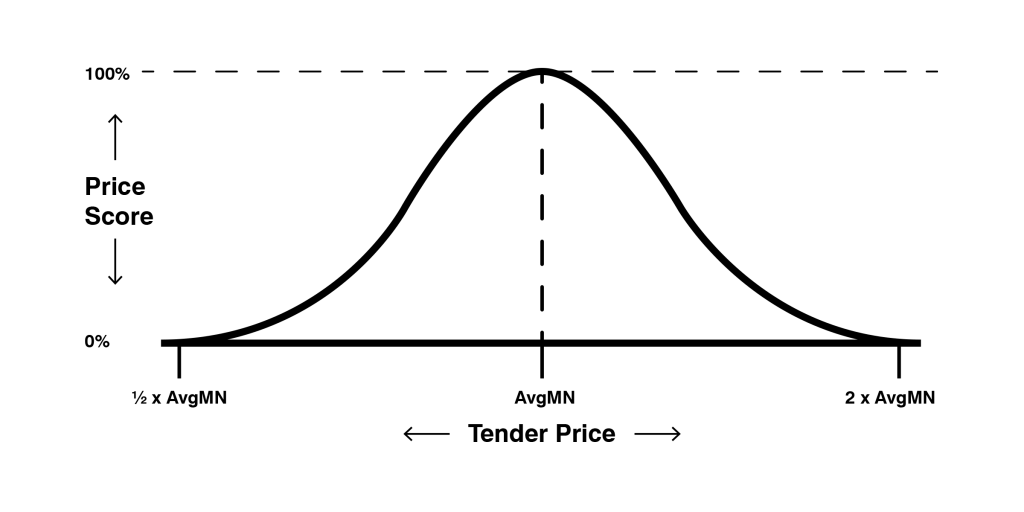

The mean narrow average (MNA) method of scoring discounts the highest and lowest tenders, establishes the mean value of those that remain, and scores every tender price according to how far it deviates from that mean value. Fee bids which are less than half, or more than double, the mean value receive a price score of zero.
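The article doesn’t give the exact formula used by the live model, but the price scores in the MNA tables below are consistent with deducting each bid’s proportional deviation from the narrow mean: price score = price weighting × (1 − |fee − MNA| ÷ MNA), with the half/double cut-off applied first. The following is a minimal sketch under that assumption; the function name `mna_price_scores` is hypothetical.

```python
# Sample fees in £
FEES = {
    "Practice A": 102_450,
    "Practice B": 78_000,
    "Practice C": 125_150,
    "Practice D": 98_500,
    "Practice E": 25_000,
    "Practice F": 107_000,
}


def mna_price_scores(fees, price_points=50.0):
    """Score each fee by its deviation from the mean narrow average (assumed formula)."""
    ordered = sorted(fees.values())
    narrow = ordered[1:-1]  # drop the single lowest and highest bids
    mna = sum(narrow) / len(narrow)
    scores = {}
    for name, fee in fees.items():
        if fee < mna / 2 or fee > mna * 2:
            scores[name] = 0.0  # less than half, or more than double, the MNA
        else:
            deviation = abs(fee - mna) / mna
            scores[name] = round(price_points * (1 - deviation), 2)
    return mna, scores


mna, scores = mna_price_scores(FEES)
print(f"MNA: £{mna:,.2f}")
for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

A bid exactly at the narrow mean would take the full price score; here Practice D, the closest, scores 48.96 out of 50, while Practice E falls below half the narrow mean and scores zero.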

With Mean Narrow Average scoring, bidders are compelled to identify the appropriate fee required to service the project rather than cutting prices to buy the job, which could lead to underperformance or claims for additional fees later in the programme. Excessively low—or high—fees are penalised.

For these pricing figures, the mean (average) bid, including the lowest and highest fee submissions, was £89,350, and the median was £100,475.

The highest and lowest fee bids (£125,150 and £25,000) have been excluded when calculating the mean narrow average, which comes to £96,487.50.
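These headline statistics can be checked with Python’s standard `statistics` module. This sketch (the helper `fee_statistics` is hypothetical) reproduces the £89,350 mean and £100,475 median quoted above, along with the narrow mean used for MNA scoring.

```python
from statistics import mean, median

# Sample fees in £
FEES = {
    "Practice A": 102_450,
    "Practice B": 78_000,
    "Practice C": 125_150,
    "Practice D": 98_500,
    "Practice E": 25_000,
    "Practice F": 107_000,
}


def fee_statistics(fees):
    """Full mean, median, and narrow mean (highest and lowest bids excluded)."""
    values = sorted(fees.values())
    return {
        "mean": mean(values),
        "median": median(values),
        "narrow_mean": mean(values[1:-1]),
    }


for label, value in fee_statistics(FEES).items():
    print(f"{label}: £{value:,.2f}")
```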

Using Mean Narrow Average with a price ratio of 50% results in Practice A being the winning bidder. Intuitively, that seems like a reasonable result: Practice A’s fee was very close to the median (there were two more expensive bids and three cheaper ones), and it scored second highest for quality. The full rankings are as follows:

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 50.00) | Quality Score (%) (max. 50.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice A (WINNER) | 102,450.00 | 46.91 | 41.00 | 87.91 |
| 2 | Practice D | 98,500.00 | 48.96 | 34.00 | 82.96 |
| 3 | Practice F | 107,000.00 | 44.55 | 38.00 | 82.55 |
| 4 | Practice B | 78,000.00 | 40.42 | 37.50 | 77.92 |
| 5 | Practice C | 125,150.00 | 35.15 | 42.50 | 77.65 |
| 6 | Practice E | 25,000.00 | 0.00 | 12.50 | 12.50 |

Alternative Ratios

To test a few alternative scenarios, I’ve run the same figures as above using different price/quality ratios. In most cases the outcome is the same: Practice A wins, right up to the point where price comprises just 10%. Then the highest scoring quality submission, which is also the most expensive bid, is the one that’s successful.

This suggests that Mean Narrow Average is probably best deployed with a quality weighting of around 60% to 70%.
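To check the ratio comparison quickly, the MNA price score and the raw quality score can be recomputed for each weighting. This sketch assumes the same MNA formula that the article’s tables are consistent with (a bid’s proportional deviation from the narrow mean is deducted from the full price score, with zero for bids below half or above double the narrow mean). The names `BIDS` and `mna_ranking` are hypothetical.

```python
# Sample bids: name -> (fee in £, quality score out of 100)
BIDS = {
    "Practice A": (102_450, 82),
    "Practice B": (78_000, 75),
    "Practice C": (125_150, 85),
    "Practice D": (98_500, 68),
    "Practice E": (25_000, 25),
    "Practice F": (107_000, 76),
}


def mna_ranking(bids, quality_weight):
    """Total = MNA price score + raw quality score, each scaled by its weighting."""
    price_points = 100 * (1 - quality_weight)
    quality_points = 100 * quality_weight
    fees = sorted(fee for fee, _ in bids.values())
    narrow = fees[1:-1]  # exclude the highest and lowest bids
    mna = sum(narrow) / len(narrow)
    totals = {}
    for name, (fee, quality) in bids.items():
        if fee < mna / 2 or fee > mna * 2:
            price_score = 0.0
        else:
            price_score = price_points * (1 - abs(fee - mna) / mna)
        totals[name] = price_score + quality_points * quality / 100
    # Highest total score first
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for quality_weight in (0.2, 0.5, 0.6, 0.7, 0.8, 0.9):
    winner, total = mna_ranking(BIDS, quality_weight)[0]
    print(f"Quality {quality_weight:.0%}: {winner} wins with {total:.2f}")
```

For the sample bids this names Practice A for the 50% to 80% quality weightings, Practice C at 90% and Practice D at 20%, matching the tables in this section.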

Quality: 60%, Price: 40%

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 40.00) | Quality Score (%) (max. 60.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice A (WINNER) | 102,450.00 | 37.53 | 49.20 | 86.73 |
| 2 | Practice F | 107,000.00 | 35.64 | 45.60 | 81.24 |
| 3 | Practice D | 98,500.00 | 39.17 | 40.80 | 79.97 |
| 4 | Practice C | 125,150.00 | 28.12 | 51.00 | 79.12 |
| 5 | Practice B | 78,000.00 | 32.34 | 45.00 | 77.34 |
| 6 | Practice E | 25,000.00 | 0.00 | 15.00 | 15.00 |

Quality: 70%, Price: 30%

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 30.00) | Quality Score (%) (max. 70.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice A (WINNER) | 102,450.00 | 28.15 | 57.40 | 85.55 |
| 2 | Practice C | 125,150.00 | 21.09 | 59.50 | 80.59 |
| 3 | Practice F | 107,000.00 | 26.73 | 53.20 | 79.93 |
| 4 | Practice D | 98,500.00 | 29.37 | 47.60 | 76.97 |
| 5 | Practice B | 78,000.00 | 24.25 | 52.50 | 76.75 |
| 6 | Practice E | 25,000.00 | 0.00 | 17.50 | 17.50 |

Quality: 80%, Price: 20%

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 20.00) | Quality Score (%) (max. 80.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice A (WINNER) | 102,450.00 | 18.76 | 65.60 | 84.36 |
| 2 | Practice C | 125,150.00 | 14.06 | 68.00 | 82.06 |
| 3 | Practice F | 107,000.00 | 17.82 | 60.80 | 78.62 |
| 4 | Practice B | 78,000.00 | 16.17 | 60.00 | 76.17 |
| 5 | Practice D | 98,500.00 | 19.58 | 54.40 | 73.98 |
| 6 | Practice E | 25,000.00 | 0.00 | 20.00 | 20.00 |

Quality: 90%, Price: 10%

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 10.00) | Quality Score (%) (max. 90.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice C (WINNER) | 125,150.00 | 7.03 | 76.50 | 83.53 |
| 2 | Practice A | 102,450.00 | 9.38 | 73.80 | 83.18 |
| 3 | Practice F | 107,000.00 | 8.91 | 68.40 | 77.31 |
| 4 | Practice B | 78,000.00 | 8.08 | 67.50 | 75.58 |
| 5 | Practice D | 98,500.00 | 9.79 | 61.20 | 70.99 |
| 6 | Practice E | 25,000.00 | 0.00 | 22.50 | 22.50 |

Out of interest, what happens if we reverse the ratio to prioritise cost over quality, using the Mean Narrow Average scoring method? Well, here we go:

Quality: 20%, Price: 80%

| Ranking | Bidder Name | Fee (£) | Price Score (%) (max. 80.00) | Quality Score (%) (max. 20.00) | Total Score (%) |
|---|---|---|---|---|---|
| 1 | Practice D (WINNER) | 98,500.00 | 78.33 | 13.60 | 91.93 |
| 2 | Practice A | 102,450.00 | 75.06 | 16.40 | 91.46 |
| 3 | Practice F | 107,000.00 | 71.28 | 15.20 | 86.48 |
| 4 | Practice B | 78,000.00 | 64.67 | 15.00 | 79.67 |
| 5 | Practice C | 125,150.00 | 56.24 | 17.00 | 73.24 |
| 6 | Practice E | 25,000.00 | 0.00 | 5.00 | 5.00 |

Surprisingly (at least to me), Practice A still scores very highly, coming second to Practice D, which had a similar but slightly lower price and the second-lowest quality score. Nobody in their right mind would advocate commissioning architectural services on such a skewed ratio, but it does support our earlier conclusion that a quality ratio of between 60% and 70% is likely to yield the best outcome for everyone.

A combination of the Mean Narrow Average (MNA) and Relative to Best scoring methods could also be used, i.e. with the price score calculated using MNA and the highest quality score receiving all of the quality points available. Given the success of the simple MNA method, however, it’s probably unnecessary.
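For completeness, the combined approach can be sketched the same way. This assumes MNA price scoring (using the deviation-from-the-narrow-mean formula that the tables above are consistent with) plus relative to best quality scoring, simply added together. The article doesn’t define the combination precisely, so treat this as illustrative; `BIDS` and `mna_relative_to_best` are hypothetical names. At 50:50, with the sample bids, it still ranks Practice A first.

```python
# Sample bids: name -> (fee in £, quality score out of 100)
BIDS = {
    "Practice A": (102_450, 82),
    "Practice B": (78_000, 75),
    "Practice C": (125_150, 85),
    "Practice D": (98_500, 68),
    "Practice E": (25_000, 25),
    "Practice F": (107_000, 76),
}


def mna_relative_to_best(bids, quality_weight=0.5):
    """MNA price score (assumed formula) plus quality scored against the best submission."""
    price_points = 100 * (1 - quality_weight)
    quality_points = 100 * quality_weight
    fees = sorted(fee for fee, _ in bids.values())
    mna = sum(fees[1:-1]) / (len(fees) - 2)  # highest and lowest bids excluded
    best_quality = max(quality for _, quality in bids.values())
    totals = {}
    for name, (fee, quality) in bids.items():
        in_range = mna / 2 <= fee <= mna * 2  # outside half/double scores zero
        price_score = price_points * (1 - abs(fee - mna) / mna) if in_range else 0.0
        quality_score = quality_points * quality / best_quality
        totals[name] = round(price_score + quality_score, 2)
    # Highest total score first
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


for name, total in mna_relative_to_best(BIDS):
    print(f"{name}: {total:.2f}")
```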

All of these figures have been generated using a live model which you can test with different figures of your choice, here. And if you’re a procurement officer or public client, try putting some real-life tender figures you’ve received into it too, and see whether the outcome would have been any different.

Addendum

After posting this article on LinkedIn, I was directed to a comprehensive analysis of the various pricing models available to the public sector, written by Rebecca Rees of Trowers & Hamlins, which sets these out in far more detail than I could ever hope to.
